AMD’s new CPU hits 132fps in Fortnite without a graphics card::Also get 49fps in BG3, 119fps in CS2, and 41fps in Cyberpunk 2077 using the new AMD Ryzen 8700G, all without the need for an extra CPU cooler.

I have routinely been impressed with AMD integrated graphics. For my last laptop I specifically went for one, as it meant I didn’t need a dedicated GPU, which adds significant weight, cost, and power draw.

It isn’t my main gaming rig, of course, but I have had no complaints.

Same. I got a cheap Ryzen laptop a few years back and put Linux on it last year, and I’ve been shocked by how well it can play some games. I just recently got Disgaea 7 (mostly to play on Steam Deck) and it’s so well optimized that I get steady 60fps, at full resolution, on my shitty integrated graphics.

I have a Lenovo ultralight with a 7730U mobile chip in it, which is a pretty mid cpu… happily plays minecraft at a full 60fps while using like 10W on the package. I can play Minecraft on battery for like 4 hours. It’s nuts.

AMD does the right thing and uses their full graphics uArch CU’s for the iGPU on a new die, instead of trying to cram some poorly designed iGPU inside the CPU package like Intel does.

AMD’s integrated GPUs have been getting really good lately. I’m impressed at what they are capable of with gaming handhelds and it only makes sense to put the same extra GPU power into desktop APUs. This hopefully will lead to true gaming laptops that don’t require power hungry discrete GPUs and workarounds/render offloading for hybrid graphics. That said, to truly be a gaming laptop replacement I want to see a solid 60fps minimum at 1080p or higher, but the fact that we’re seeing numbers close to this is impressive nonetheless.

I was sold on AMD once I got my Steam Deck.

Everything I see about AMD makes me like them more than Intel or Nvidia (for CPU and GPU respectively). You can’t even use an Nvidia card with Linux without running into serious issues.

Only downside if integrated graphics becomes a thing is that you can’t upgrade if the next gen needs a different motherboard. Pretty easy to swap from a 2080 to a 3080.

Integrated graphics is already a thing. Intel iGPU has over 60% market share. This is really competing with Intel and low-end discrete GPUs. Nice to have the option!

Yeah, I know integrated graphics is a thing. And that’s been fine for running a web browser, watching videos, or whatever other low-demand graphical application was needed for office work. Now they’re holding it up against gaming, which typically places large demands on graphical processing power.

The only reason I brought up what I did is because it’s an if… if people start looking at CPU integrated graphics as an alternative to expensive GPUs it makes an upgrade path more costly vs a short term savings of avoiding a good GPU purchase.

Again, if one’s gaming consists of games that aren’t high demand like Fortnite, then upgrades and performance probably aren’t a concern for the user. One could still end up buying a GPU and adding it to the system for more power assuming that the PSU has enough power and case has room.

AMD has been pretty good about this though, AM4 lasted 2016-2022. Compare to Intel changing the socket every 1-2 years, it seems.

Actually AMD is still releasing new AM4 CPUs now. The 5700X3D was just announced.

Oh, now that sounds like something I might like

I don’t have the fastest RAM out there, so whenever I upgrade from my 1600, I want an X3D variant to help with that

Do it!

There’s a 5600X3D as well.

I think I’m gonna get one of the higher end models, since it’ll be the best possible upgrade I can do on my motherboard.

So 5700X3D or 5800X3D, depending on what the prices look like whenever I’m gonna be in the market for them.

But it’s pretty cool that they made a 5600 variant too. Might as well use the chips they evidently still have left over

That’s true but I’m excited about the future of laptops. Some of the specs are getting really impressive while keeping low power draw. I’m currently jealous of what Apple has accomplished with literal all day battery life in a 14inch laptop. I’m hopeful some of the AMD chips will get us there in other hardware.

Could you not just slot in a dedicated video card if you needed one, keeping the integrated as a backup?

Yeah, maybe. I commented on that elsewhere here. If we follow a possible path for IG - the elimination of a large GPU could result in the computer being sold with a smaller case and lower-power GPU. Why would you need a full tower when you can have a more compact PC with a sleek NVMe/SSD and a smaller motherboard form factor? Now there’s no room to cram a 3080 in the box and no power to drive it.

Again, someone depending on CPU IG to play Fortnite probably isn’t gonna be looking for upgrade paths. This is just an observation of a limitation imposed on users should CPU IG become more prominent. All hypothetical at this point.

Or y’know, upgrade the case at the same time.

Or even build the computer yourself. Outside of the graphics card shortage a couple of years back, it’s usually been cheaper to source parts yourself than pay an OEM for a prebuilt machine.

A small side note: If you buy a Dell/Alienware machine, you’re never upgrading just the case. The front panel IO is part of the motherboard, and the power supply is some proprietary crap. If you replace the case, you need to replace the motherboard, which also requires you to replace the power supply. At that point, you’ve replaced half the computer.

Same thing with HP. Their “Pavilion” series of towers contains a proprietary motherboard and power supply. Also, on the model a friend of mine had, the CPU was AMD, but the cooler screwed on top was designed for Intel-purposed boards, so it looked kinda frankensteined.

So in essence, it’s the same with HP.

Oh, oh ok I thought one of the new Threadrippers is so powerful that the CPU can do all those graphics in Software.

It’s gonna take decades to be able to render 1080p CP2077 at an acceptable frame rate with just software rendering.

It’s all software, even the stuff on the graphics cards. Those are the rasterisers, shaders and so on. In fact the graphics cards are extremely good at running these simple (relatively) programs in an absolutely staggering number of threads at the same time, and this has been taken advantage of by both bitcoin mining and also neural net algorithms like GPT and Llama.

So will this be a HTPC king? Kind of skimped on the temps in the article. I assume HWU goes over it and will watch it soon.

What services use the graphics card and are fine with the low end?

Common W for AMD

The good news is, Framework is shipping with AMD CPUs now. :)

Currently 7th gen Ryzens, not sure when the 8th gens become available.

For people like me who game once a month, and mostly stupid little games, this is great news. I bet many people could use that. It would reduce demand for graphics cards and let those who want them buy cheaper.

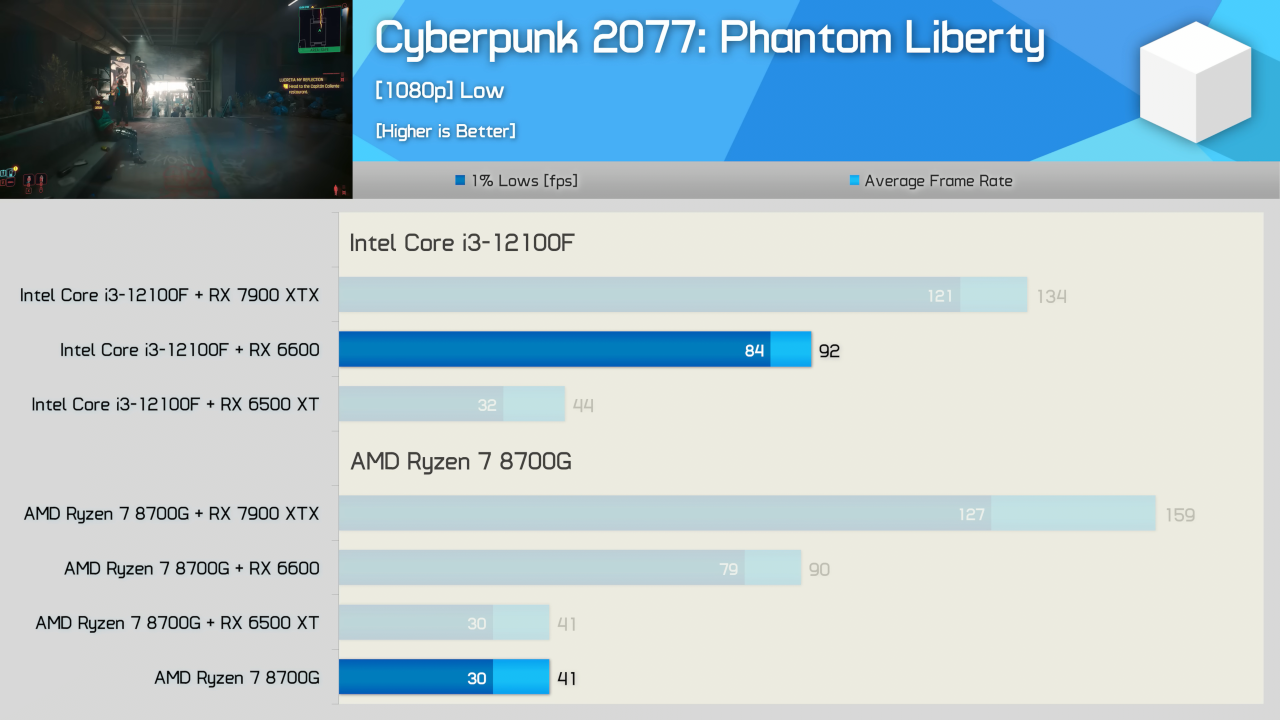

Mind you, it gets these frame rates at the low setting. While this is pretty damn impressive for an APU, it’s still a very niche market type of APU at this point and I don’t see this getting all that much traction myself.

I think the opposite is true. Discrete graphics cards are on the way out, SoCs are the future. There are just too many disadvantages to having a discrete GPU and CPU each with its own RAM. We’ll see SoCs catch up and eventually overtake PCs with discrete components. Especially with the growth of AI applications.

That’s pretty damn impressive. AMD is changing the game!

Meh. It’s also a $330 chip…

For that price you can get a 12th gen i3/RX6600 combination which will obliterate this thing in gaming performance.

Your i3 has half the cores. Spending more on GPU and less on CPU gives better fps, news at 11.

So what’s the point of this thing then?

If you just want 8 cores for productivity and basic graphics, you’re better off getting a Ryzen 7 7700, which is not gimped by half the cache and less than half the PCIe bandwidth. And for gaming, even the shittiest discrete GPUs of the current generation will beat it if you give them a half decent CPU.

This thing seems to straddle a weird position between gaming and productivity, where it can’t do either really well. At that pricepoint, I struggle to see why anyone would want it.

It’s like that old adage: there are no bad CPUs only bad prices.

> which is not gimped by half the cache and less than half the PCIe bandwidth

Half L3, yes. 24 vs. 16 (available) PCIe lanes: that’s not half, and it’s still enough for two SSDs and a GPU. If you actually want IO, buy a Threadripper. The 8700G has quite a bit more base clock; the 7700 boosts higher, but you can forget about that number with all-core loads. About 9 times the raw iGPU TFLOPs.

Oh, those TFLOPs. 4.5 vs. my RX 5500’s 5 (both in f32), yet in gaming performance mine pulls significantly ahead; must be memory bandwidth. Light inference workloads? VRAM certainly won’t be an issue: just add more sticks. Those TFLOPs will also kill BLAS workloads dead, so scientific computing is an option.
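For what it’s worth, the memory bandwidth guess can be roughed out with peak theoretical numbers. This is only a sketch: the memory configurations are assumptions that are not stated anywhere in this thread (dual-channel DDR5-5200 for the APU, a 128-bit GDDR6 bus at 14 Gbps per pin for the RX 5500), and `peak_bandwidth_gb_s` is a made-up helper name.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_mt_s: float) -> float:
    """Peak theoretical bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_mt_s / 1000

# ASSUMPTION: APU on dual-channel DDR5-5200 (2 x 64-bit channels), shared with the CPU cores.
apu_bw = peak_bandwidth_gb_s(128, 5200)

# ASSUMPTION: RX 5500 with a 128-bit GDDR6 bus at 14 Gbps per pin, dedicated to the GPU.
rx5500_bw = peak_bandwidth_gb_s(128, 14000)

print(f"APU system RAM: {apu_bw:.1f} GB/s")
print(f"RX 5500 VRAM:   {rx5500_bw:.1f} GB/s")
print(f"Ratio:          {rx5500_bw / apu_bw:.2f}x")
```

Under those assumptions the discrete card has well over twice the peak bandwidth, and the iGPU still has to share its portion with the CPU, which would fit similar TFLOPs giving quite different frame rates. Faster RAM on the APU side would narrow the gap.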

Can’t find proper numbers right now but the 7700 should have a total of about half a TFLOP CPU and half a TFLOP GPU.

So, long story short: When you’re a university and a prof says “I need a desktop to run R”, that’s the CPU you want.

> 24 vs. 16 (available) PCIe lanes that’s not half

24x PCIe 5.0 vs 16x PCIe 4.0

So 8 lanes fewer, and each lane has half the bandwidth = less than half the PCIe bandwidth.
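Spelled out as a quick calculation, a rough sketch using the lane counts quoted above and the standard per-lane signalling rates (16 GT/s for PCIe 4.0, 32 GT/s for PCIe 5.0, both with 128b/130b encoding). `link_bandwidth_gb_s` is just an illustrative helper, and protocol overhead beyond the line encoding is ignored:

```python
# Raw per-lane transfer rates in GT/s.
GT_PER_S = {"4.0": 16.0, "5.0": 32.0}
# PCIe 3.0 and later carry 128 payload bits per 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130

def link_bandwidth_gb_s(gen: str, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s for a given generation and lane count."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY / 8 * lanes

bw_7700 = link_bandwidth_gb_s("5.0", 24)   # 24 lanes of PCIe 5.0, as quoted in the thread
bw_8700g = link_bandwidth_gb_s("4.0", 16)  # 16 lanes of PCIe 4.0, as quoted in the thread
ratio = bw_8700g / bw_7700

print(f"7700:  {bw_7700:.1f} GB/s")
print(f"8700G: {bw_8700g:.1f} GB/s")
print(f"8700G has {ratio:.0%} of the 7700's aggregate PCIe bandwidth")
```

With these numbers the 8700G ends up at about a third of the 7700’s aggregate bandwidth: two thirds of the lanes, each at half the rate.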

I hope red and blue both find success in this segment. Ideally the strengthened APU share of the market exerts pressure on publishers to properly optimize their games instead of cynically offloading the compute cost onto players.

Hell yeah, I want EVERYONE to make dope ass shit. I’ve made machines with both sides, and I hate tribal…ness. My current machine is a 9900k that’s getting to be… five years old?! I’d make an AMD machine today if I needed a new machine. AMD/Intel rivalry is so good for us all. Intel slacked so hard after the 9000-series. I hope they come back.

Intel has slacked hard since the 2000-series. One shitty 4 core release after another, until AMD kicked things into gear with Ryzen.

And during that time you couldn’t buy Intel due to security flaws (Meltdown, Spectre, …).

Even now they are slacking, just look at the power consumption. The way they currently produce CPUs isn’t sustainable (AMD pays way less per chip with the chiplet design and is far more flexible).

I wonder how well it does AI workloads.

Aaaaand the 7950x3D is not top tier anymore

Back in my day the 7950 was a GPU!

Yelling at clouds

The PlayStation 5 also does this.
